My Deep Dive into LangExtract: From Basics to Analyzing 1,000 LLM Responses

I explored LangExtract, a Gemini-powered library designed to turn unstructured text into structured data.

I recently spent time researching LangExtract to understand exactly what it is, how it works, and why it has become a powerful tool for developers working with unstructured text. This is a breakdown of what I found, written as I learned it.

What is LangExtract?

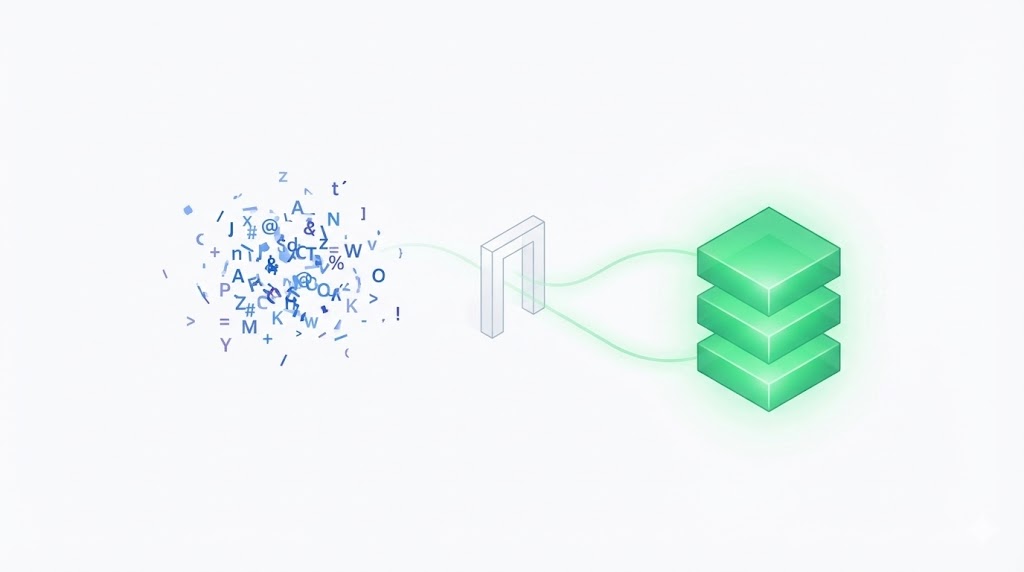

In simple terms, I found that LangExtract is a system designed to turn messy, unstructured text into clean, structured data.

When I first started looking into processing text with AI, I realized that getting a model to reliably extract specific details (like names, dates, or sentiments) is difficult. You often end up with data that is hard to verify because you don't know exactly where in the text the model found the answer.

LangExtract solves this by acting as a specialized processor. It connects your raw documents to a large language model (Google's Gemini models by default) and enforces a consistent structure on the output. It doesn't just give you an answer; it provides precise source grounding. This means each piece of data it extracts is mapped back to its exact character position in the original text.
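To make grounding concrete, here is a minimal, library-free sketch of the idea in Python. It is not LangExtract's actual implementation: given an extracted phrase, it finds where that phrase sits in the source and keeps the character offsets. `ground` and `GroundedExtraction` are hypothetical names of my own. As far as I can tell, LangExtract does this alignment internally, exposes the result on each extraction as a `char_interval`, and handles cases that a plain exact-match search would miss.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GroundedExtraction:
    """An extracted phrase plus the character span it came from (hypothetical type)."""
    label: str
    text: str
    start: Optional[int]  # inclusive character offset; None if the phrase was not found
    end: Optional[int]    # exclusive character offset; None if the phrase was not found


def ground(source: str, label: str, extracted: str) -> GroundedExtraction:
    """Map an extracted phrase to its first exact occurrence in the source text."""
    start = source.find(extracted)
    if start == -1:
        # Paraphrased or invented text has nothing to point at, so leave it ungrounded.
        return GroundedExtraction(label, extracted, None, None)
    return GroundedExtraction(label, extracted, start, start + len(extracted))


# Invented example sentence, not from the real dataset.
sentence = "Freedom is the burden of choosing who you become."
tone = ground(sentence, "tone", "burden")
print(tone)  # GroundedExtraction(label='tone', text='burden', start=15, end=21)
print(sentence[tone.start:tone.end])  # burden
```

Slicing the source with the stored offsets returns the exact phrase. That round trip is what makes each extracted value checkable by a human.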

Core Principles I Discovered

As I dug deeper, I identified the architectural concepts that make this tool unique:

- Precise Source Grounding: This is the standout feature. Unlike standard AI summaries that just generate new text, LangExtract maps every extracted entity back to its source. If it identifies a "Tone" as "Anxious," it points to the exact phrase in the text that indicates anxiety. This makes the data verifiable.

- Example-Based Learning: Instead of writing parsing rules or training a custom model, LangExtract uses a "few-shot" approach. You provide a short prompt plus one or more high-quality examples of the structure you want. The system uses these examples as a blueprint to process new, unseen data.

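- Structure Enforcement: The output follows the structure defined by your examples (extraction classes, the exact extracted text, and any attributes you attach), regardless of how messy the original text is. As I understand it, on models that support it, such as Gemini, LangExtract uses controlled generation to keep the output within that schema.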
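One rough way to picture structure enforcement is a validator that only lets schema-conforming records through. This sketch uses post-hoc filtering, a simpler technique I am swapping in for illustration. My understanding is that LangExtract instead constrains the model's output during generation on models that support it. The field names `extraction_class` and `extraction_text` mirror LangExtract's `Extraction` fields. `ALLOWED_CLASSES`, `validate_output`, and the JSON reply shape are assumptions.

```python
import json

# Hypothetical schema: the classes my example defines and the keys every record needs.
ALLOWED_CLASSES = {"main_concept", "tone"}
REQUIRED_KEYS = {"extraction_class", "extraction_text"}


def validate_output(raw: str) -> list:
    """Parse a JSON reply (assumed shape: {"extractions": [...]}) and keep valid records."""
    try:
        payload = json.loads(raw)
    except json.JSONDecodeError:
        return []  # An unparseable reply counts as zero extractions.
    if not isinstance(payload, dict):
        return []
    records = payload.get("extractions", [])
    if not isinstance(records, list):
        return []
    valid = []
    for record in records:
        if not isinstance(record, dict) or not REQUIRED_KEYS.issubset(record):
            continue  # Missing fields: reject.
        if record["extraction_class"] not in ALLOWED_CLASSES:
            continue  # A class the schema never defined: reject.
        valid.append(record)
    return valid


reply = (
    '{"extractions": ['
    '{"extraction_class": "tone", "extraction_text": "burden"},'
    '{"extraction_class": "color", "extraction_text": "blue"}]}'
)
print(validate_output(reply))  # [{'extraction_class': 'tone', 'extraction_text': 'burden'}]
print(validate_output("not json"))  # []
```

Filtering after the fact throws away bad records. Constraining generation, as LangExtract reportedly does, avoids producing them in the first place.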

Potential Use Cases

Based on my research, here are three common ways LangExtract is applied:

- Clinical & Medical Extraction: It processes clinical notes to extract medications and diagnoses, linking them back to the doctor's original notes for safety and verification.

- Legal & Financial Analysis: It pulls specific clauses or financial figures from long reports. The "source grounding" is critical here because you need to prove exactly where a number came from in a contract.

- Data Labeling & Analysis: It processes thousands of reviews or survey responses, extracting themes and sentiment scores into a structured format for quantitative analysis.

My Project: Analyzing Variance in 1,000 AI Responses

To truly understand how LangExtract handles structure and scale, I designed a simple experiment.

The Hypothesis: AI models are non-deterministic. If I ask a model to "Explain the concept of 'freedom'" 1,000 times, the answers will vary. I wanted to measure how much they vary in tone and content.

The Goal: Take 1,000 raw text definitions of "freedom" and use LangExtract to structure them into data I could mathematically analyze.

Step 1: The Input Data

First, I generated my dataset: 1,000 different one-sentence definitions of "freedom." Now I had a pile of unstructured text that I needed to turn into data.

Step 2: Designing the Blueprint

I needed to tell the system what to look for. Instead of writing parsing code, I created a schema definition.

I defined two specific fields I wanted to extract:

- Main Concept: The core philosophical idea.

- Tone: The emotional weight of the sentence.

To ensure accuracy, I provided one "gold standard" example. I showed the system a sample sentence and manually labeled the Concept and Tone myself. This served as the teaching guide for the engine.
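In code, the blueprint amounts to a prompt plus one labeled example. The sketch below uses plain Python to mirror the shape of LangExtract's `lx.data.ExampleData` and `lx.data.Extraction`: example text, an extraction class, the exact extracted text, and optional attributes. The gold sentence, the class names, the `theme` and `valence` attributes, and the `build_prompt` helper are hypothetical stand-ins, not the example I actually used. LangExtract also formats its own prompts internally, so `build_prompt` only illustrates the one-shot idea.

```python
from dataclasses import dataclass, field


@dataclass
class Labeled:
    extraction_class: str  # "main_concept" or "tone" (hypothetical class names)
    extraction_text: str   # exact phrase copied from the example sentence
    attributes: dict = field(default_factory=dict)


@dataclass
class GoldExample:
    text: str
    extractions: list

    def missing_phrases(self) -> list:
        """Labels whose text is not a verbatim substring of the example sentence."""
        return [e.extraction_text for e in self.extractions if e.extraction_text not in self.text]


PROMPT = (
    "Extract the main concept and the tone of this definition of freedom. "
    "Use exact text from the sentence; do not paraphrase."
)

gold = GoldExample(
    text="Freedom is the quiet relief of owing nothing to anyone.",
    extractions=[
        Labeled("main_concept", "owing nothing to anyone", {"theme": "independence"}),
        Labeled("tone", "quiet relief", {"valence": "positive"}),
    ],
)


def build_prompt(instructions: str, example: GoldExample, new_text: str) -> str:
    """Assemble a one-shot prompt: instructions, the labeled example, then the new input."""
    lines = [instructions, "", f"Example: {example.text}"]
    for e in example.extractions:
        lines.append(f"- {e.extraction_class}: {e.extraction_text!r} {e.attributes}")
    lines += ["", f"Input: {new_text}"]
    return "\n".join(lines)


print(gold.missing_phrases())  # [] means every label is grounded in the example
print(build_prompt(PROMPT, gold, "Freedom is the right to say no."))
```

Checking that every labeled phrase appears verbatim in the example seems worthwhile. A gold example that paraphrases would, in my understanding, nudge the model toward paraphrasing, which undermines grounding.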

Step 3: The Extraction Engine

I fed the 1,000 sentences into the LangExtract processor.

This is where the architecture shone. The system didn't just "read" the text; it analyzed each sentence against my blueprint. It identified the Concept and Tone, extracted them, and recorded the "coordinates" (start and end character offsets) of where those words appeared in the sentence.
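Here is a sketch of what "structured" means after this step: every extraction becomes one row holding its sentence ID, class, text, and character span. The `results` list is invented to stand in for model output. LangExtract reports the offsets itself; re-deriving them with `str.find` just keeps this self-contained, and it only finds the first occurrence. Writing CSV is my own choice of storage format.

```python
import csv
import io


def flatten(results):
    """One row per extraction; results is [(sentence, [(class, phrase), ...]), ...]."""
    rows = []
    for sentence_id, (sentence, extractions) in enumerate(results):
        for extraction_class, phrase in extractions:
            start = sentence.find(phrase)  # First exact match only; -1 if absent.
            grounded = start != -1
            rows.append({
                "sentence_id": sentence_id,
                "class": extraction_class,
                "text": phrase,
                "start": start if grounded else None,
                "end": start + len(phrase) if grounded else None,
            })
    return rows


def to_csv(rows):
    """Serialize rows to CSV text; None offsets become empty cells."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["sentence_id", "class", "text", "start", "end"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Two invented sentences with made-up extractions standing in for model output.
results = [
    ("Freedom is the burden of choosing.", [("main_concept", "choosing"), ("tone", "burden")]),
    ("Freedom is liberty from fear.", [("main_concept", "liberty from fear"), ("tone", "liberty")]),
]
rows = flatten(results)
print(to_csv(rows))
```

With 1,000 sentences, the same loop yields a table that any spreadsheet or dataframe library can group and count.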

Step 4: The Analysis

Once processed, I didn't just have text strings; I had a structured database.

I compared the results:

- Concept Variance: I grouped the results by Main Concept to see which ideas came up most often.

- Tone Consistency: I compared how the extracted Tone labels were distributed across the responses.
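The write-up doesn't name a specific statistic, so here is one hedged way to put a number on "variance" for categorical labels. It computes relative frequencies with `collections.Counter` and normalized Shannon entropy, which is 0 when every response gets the same label and 1 when all observed labels are equally common. Both the metric and the label values are my assumptions for illustration, not results from the experiment.

```python
import math
from collections import Counter


def distribution(labels):
    """Share of responses carrying each label, most common first."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.most_common()}


def normalized_entropy(labels):
    """Shannon entropy divided by its maximum for the number of observed labels."""
    counts = Counter(labels)
    if len(counts) < 2:
        return 0.0  # One label (or none) means no variation at all.
    total = sum(counts.values())
    h = -sum((n / total) * math.log(n / total) for n in counts.values())
    return h / math.log(len(counts))


# Invented labels for illustration only.
concepts = ["self-determination"] * 3 + ["absence of constraint"]
tones = ["hopeful", "anxious", "defiant", "neutral"]
print(distribution(concepts))  # {'self-determination': 0.75, 'absence of constraint': 0.25}
print(round(normalized_entropy(concepts), 3))  # 0.811
print(round(normalized_entropy(tones), 3))  # 1.0
```

Normalizing by the number of observed labels puts Concept and Tone on the same 0 to 1 scale even if they use different label sets. The trade-off is that labels that never occur do not count toward the maximum.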

What I Found: The analysis showed that while the definitions were similar in content, their emotional tone varied significantly. Because LangExtract linked every Tone label back to specific words (e.g., "burden" vs. "liberty"), I could trace exactly which words were driving the negative or positive sentiment.
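Because each tone label is tied to a grounded phrase, finding the words behind a tone reduces to counting phrases per label. Here is a minimal sketch, assuming the tone extractions have already been reduced to (label, phrase) pairs. The pairs below are made up, and lower-casing phrases before counting is my own normalization choice.

```python
from collections import Counter, defaultdict


def drivers_by_tone(tone_extractions, top_n=3):
    """Most frequent grounded phrases under each tone label."""
    by_tone = defaultdict(Counter)
    for tone_label, phrase in tone_extractions:
        by_tone[tone_label][phrase.lower()] += 1  # Treat "Burden" and "burden" as one phrase.
    return {tone: counts.most_common(top_n) for tone, counts in by_tone.items()}


# Invented (tone label, grounded phrase) pairs, not real results.
sample = [
    ("negative", "burden"),
    ("negative", "Burden"),
    ("negative", "weight"),
    ("positive", "liberty"),
    ("positive", "relief"),
    ("positive", "liberty"),
]
print(drivers_by_tone(sample))
# {'negative': [('burden', 2), ('weight', 1)], 'positive': [('liberty', 2), ('relief', 1)]}
```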

Conclusion

This project showed me how much LangExtract can improve data reliability. By using Source Grounding and Example-Based Blueprints, I turned 1,000 distinct, messy sentences into a verifiable dataset without writing complex parsing logic. It bridges the gap between the messy reality of human language and the structured needs of data analysis.